Recovering the Homographies

A homography is a transformation that sends $v_{1} = \begin{bmatrix} x \\ y \\ 1 \end{bmatrix}$ to $v_{2} = \begin{bmatrix} x^{\prime} \\ y^{\prime} \\ 1 \end{bmatrix}$, up to a scale factor $c$, through a $3 \times 3$ matrix:

\begin{equation*} c \begin{bmatrix} x^{\prime} \\ y^{\prime} \\ 1 \end{bmatrix} = \begin{bmatrix} h_{1} & h_{2} & h_{3} \\ h_{4} & h_{5} & h_{6} \\ h_{7} & h_{8} & 1 \end{bmatrix} \begin{bmatrix} x \\ y \\ 1 \end{bmatrix} \end{equation*}

Multiplying everything out, we get:

\begin{align*} cx^{\prime} &= h_{1}x + h_{2}y + h_{3} \\ cy^{\prime} &= h_{4}x + h_{5}y + h_{6} \\ c &= h_{7}x + h_{8}y + 1 \end{align*}

Substituting the third equation for $c$ into the first two gives:

\begin{align*} (h_{7}x + h_{8}y + 1)x^{\prime} &= h_{1}x + h_{2}y + h_{3} \\ (h_{7}x + h_{8}y + 1)y^{\prime} &= h_{4}x + h_{5}y + h_{6} \end{align*}

Moving every term to one side gives the system of equations:

\begin{align*} h_{1}x + h_{2}y + h_{3} + 0h_{4} + 0h_{5} + 0h_{6} - h_{7}xx^{\prime} - h_{8}yx^{\prime} - 1x^{\prime} &= 0 \\ 0h_{1} + 0h_{2} + 0h_{3} + h_{4}x + h_{5}y + h_{6} - h_{7}xy^{\prime} - h_{8}yy^{\prime} - 1y^{\prime} &= 0 \end{align*}
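Moving the $x^{\prime}$ and $y^{\prime}$ terms to the right-hand side, the same two equations can be written in matrix form, which makes the eight unknowns explicit:

\begin{equation*} \begin{bmatrix} x & y & 1 & 0 & 0 & 0 & -xx^{\prime} & -yx^{\prime} \\ 0 & 0 & 0 & x & y & 1 & -xy^{\prime} & -yy^{\prime} \end{bmatrix} \begin{bmatrix} h_{1} \\ h_{2} \\ h_{3} \\ h_{4} \\ h_{5} \\ h_{6} \\ h_{7} \\ h_{8} \end{bmatrix} = \begin{bmatrix} x^{\prime} \\ y^{\prime} \end{bmatrix} \end{equation*}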

This lets us solve for the entries of the matrix $H$ through a linear system $Ah = b$, where for a single correspondence $A$ is a $2 \times 8$ matrix, $h$ is the vector containing the entries $h_1, \ldots, h_8$, and $b$ is the vector containing the values $x', y'$. Each additional correspondence appends two more rows to $A$ and $b$. Since there are 8 unknowns, at least four correspondences are needed, and with more than four the overdetermined system can be solved through least squares to find the projective mapping.
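As a rough illustration, the least-squares setup described above might be sketched in NumPy as follows. The function names `compute_homography` and `apply_homography` are hypothetical and chosen for this example; this is a minimal sketch that assumes the points are given as `(n, 2)` arrays with `n >= 4`, not necessarily the exact code used for the results shown here.

```python
import numpy as np


def compute_homography(src, dst):
    """Estimate the 3x3 homography H mapping src points to dst points.

    src, dst: array-likes of shape (n, 2), n >= 4 (assumed layout).
    Fixes the bottom-right entry of H to 1 and solves for h1..h8.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    n = src.shape[0]

    A = np.zeros((2 * n, 8))
    b = np.zeros(2 * n)
    for i, ((x, y), (xp, yp)) in enumerate(zip(src, dst)):
        # Two rows per correspondence, matching the two equations above.
        A[2 * i] = [x, y, 1, 0, 0, 0, -x * xp, -y * xp]
        A[2 * i + 1] = [0, 0, 0, x, y, 1, -x * yp, -y * yp]
        b[2 * i] = xp
        b[2 * i + 1] = yp

    # Least-squares solution of the (possibly overdetermined) system.
    h, *_ = np.linalg.lstsq(A, b, rcond=None)
    return np.append(h, 1.0).reshape(3, 3)


def apply_homography(H, pts):
    """Map (n, 2) points through H and divide out the scale factor c."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))])
    mapped = homog @ H.T
    return mapped[:, :2] / mapped[:, 2:3]
```

Calling `compute_homography(src, dst)` on the clicked correspondences returns `H`, and `apply_homography(H, src)` should then land close to `dst`. Fixing the last entry to 1 keeps the system linear, at the cost of not representing homographies whose bottom-right entry is zero.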

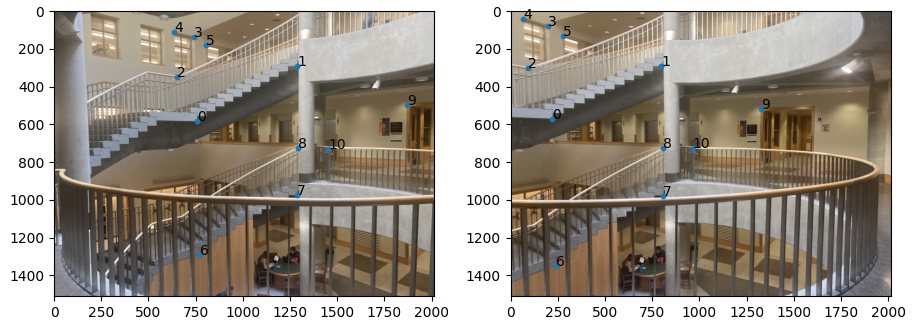

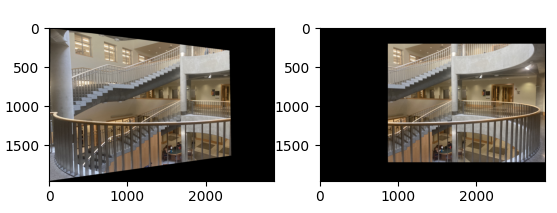

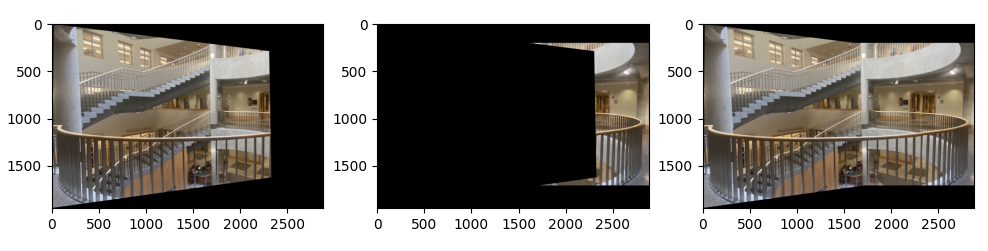

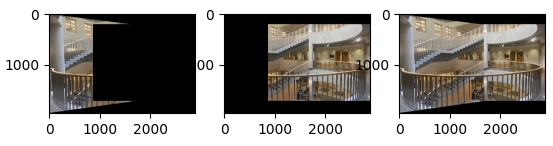

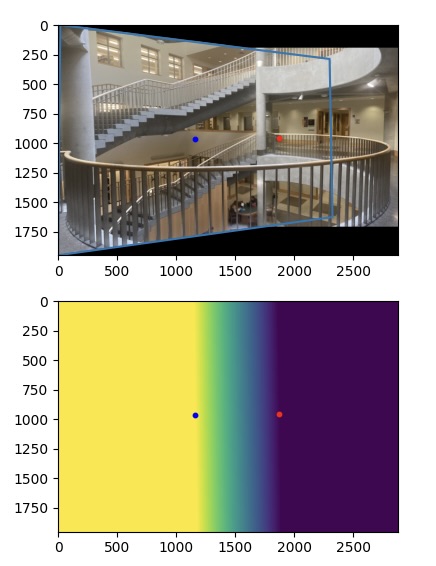

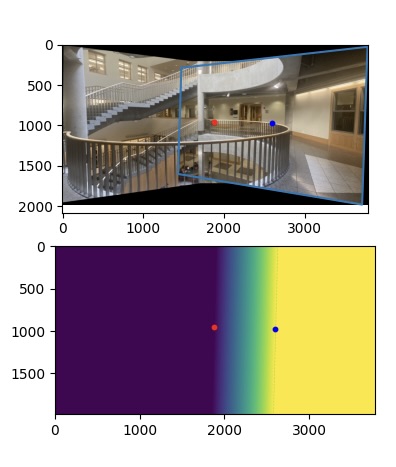

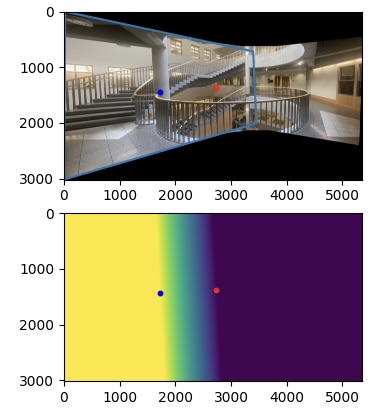

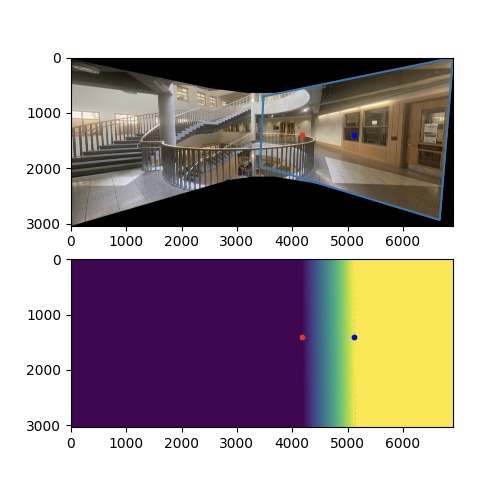

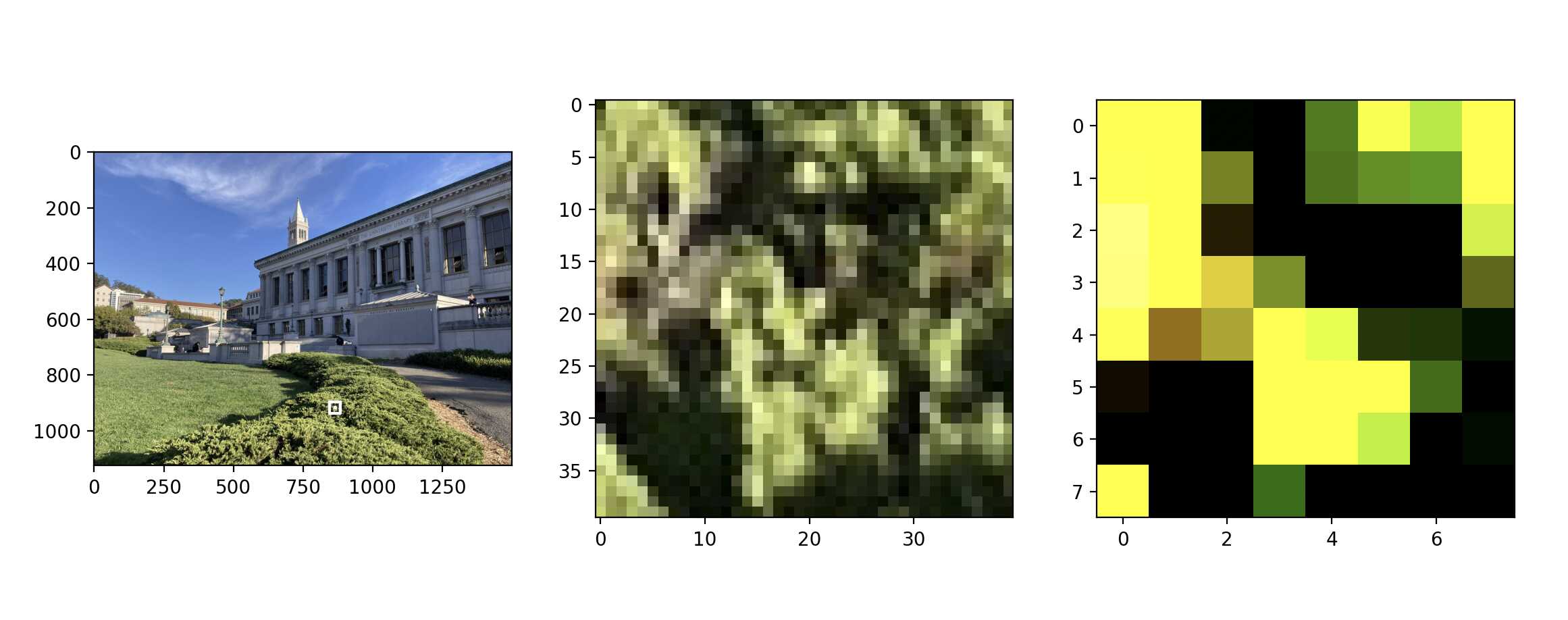

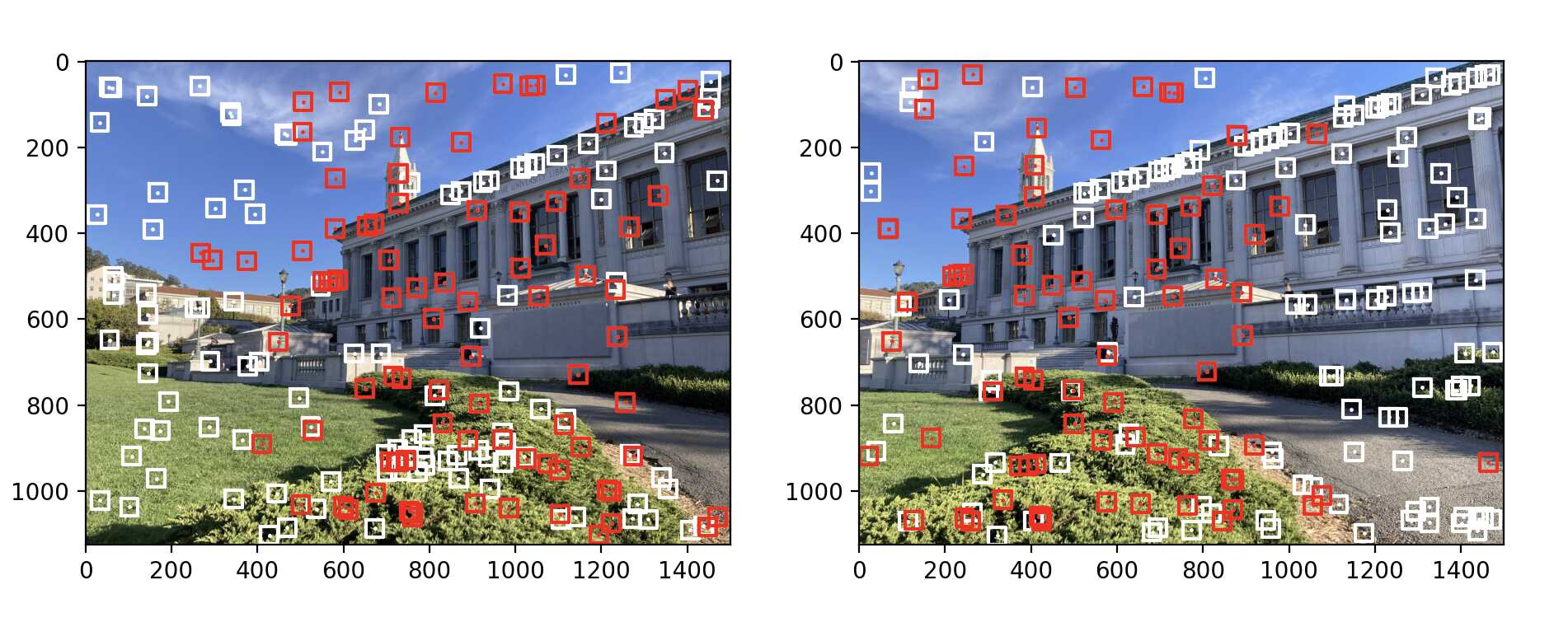

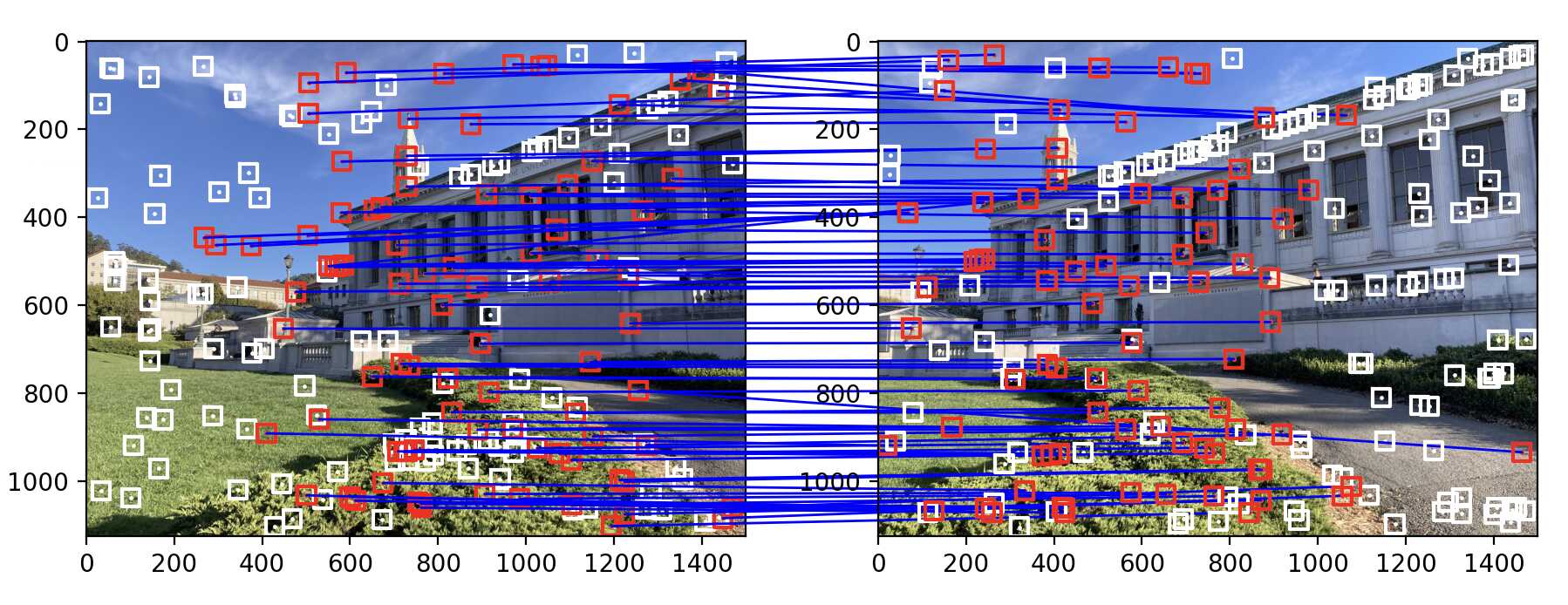

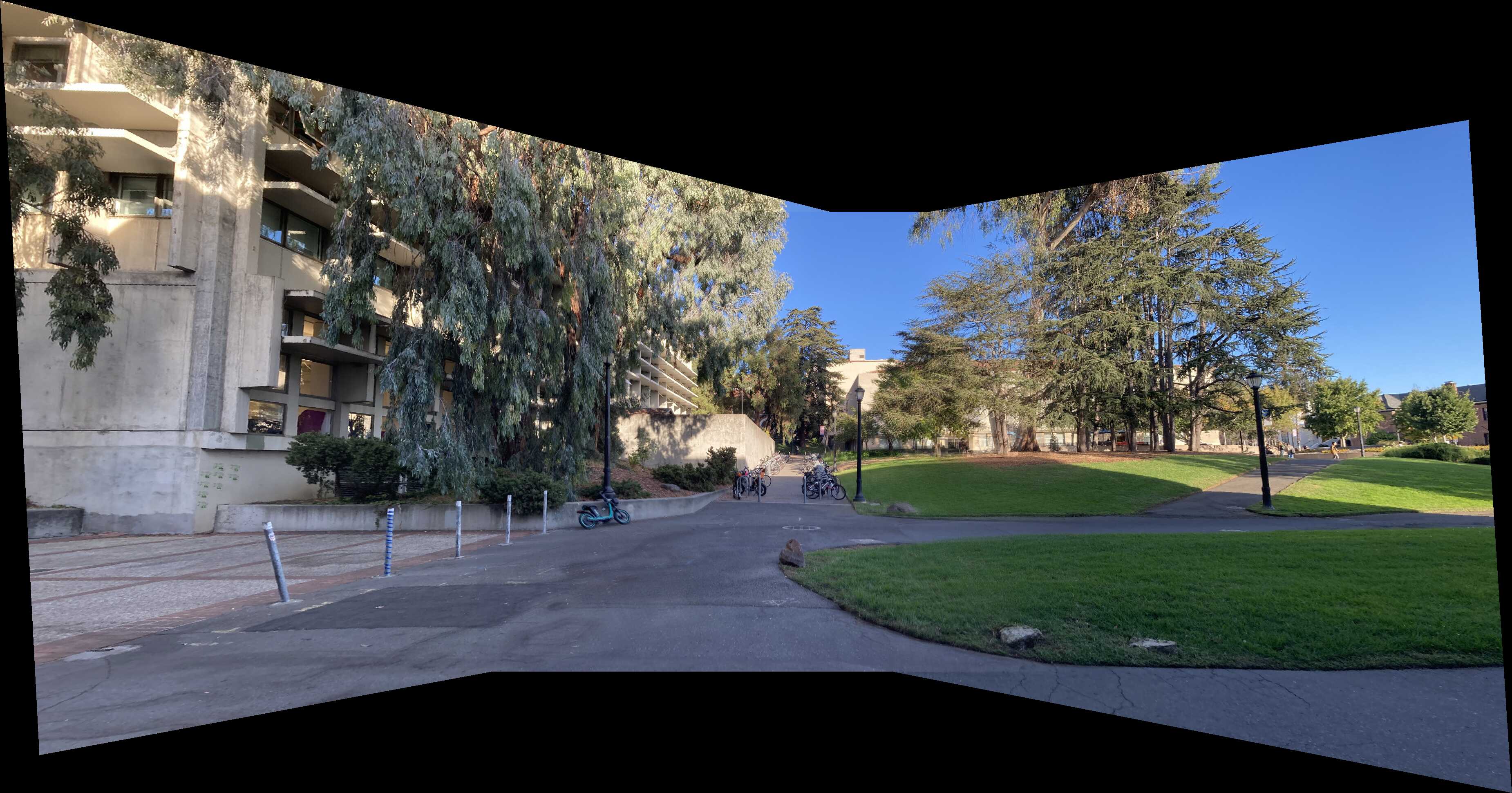

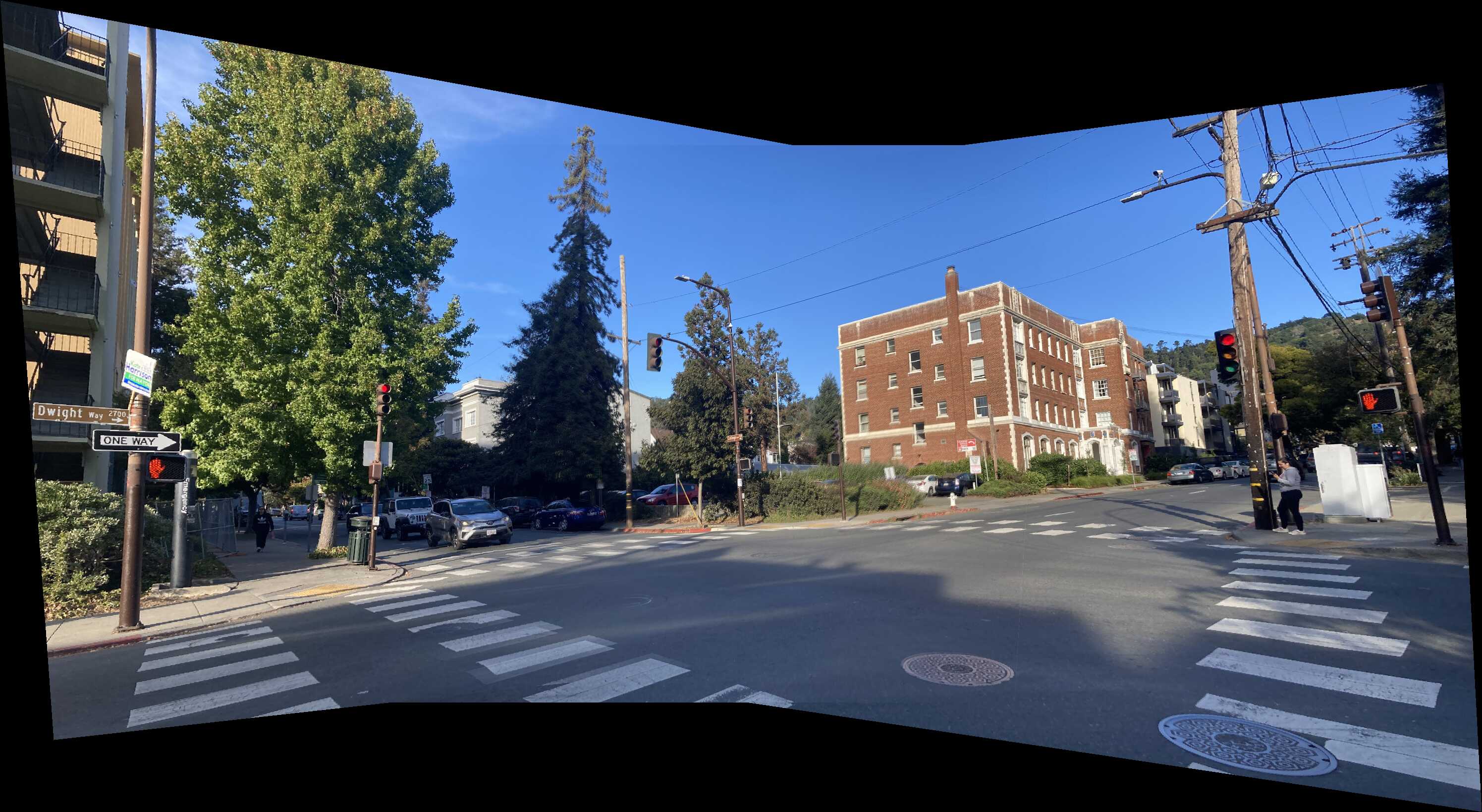

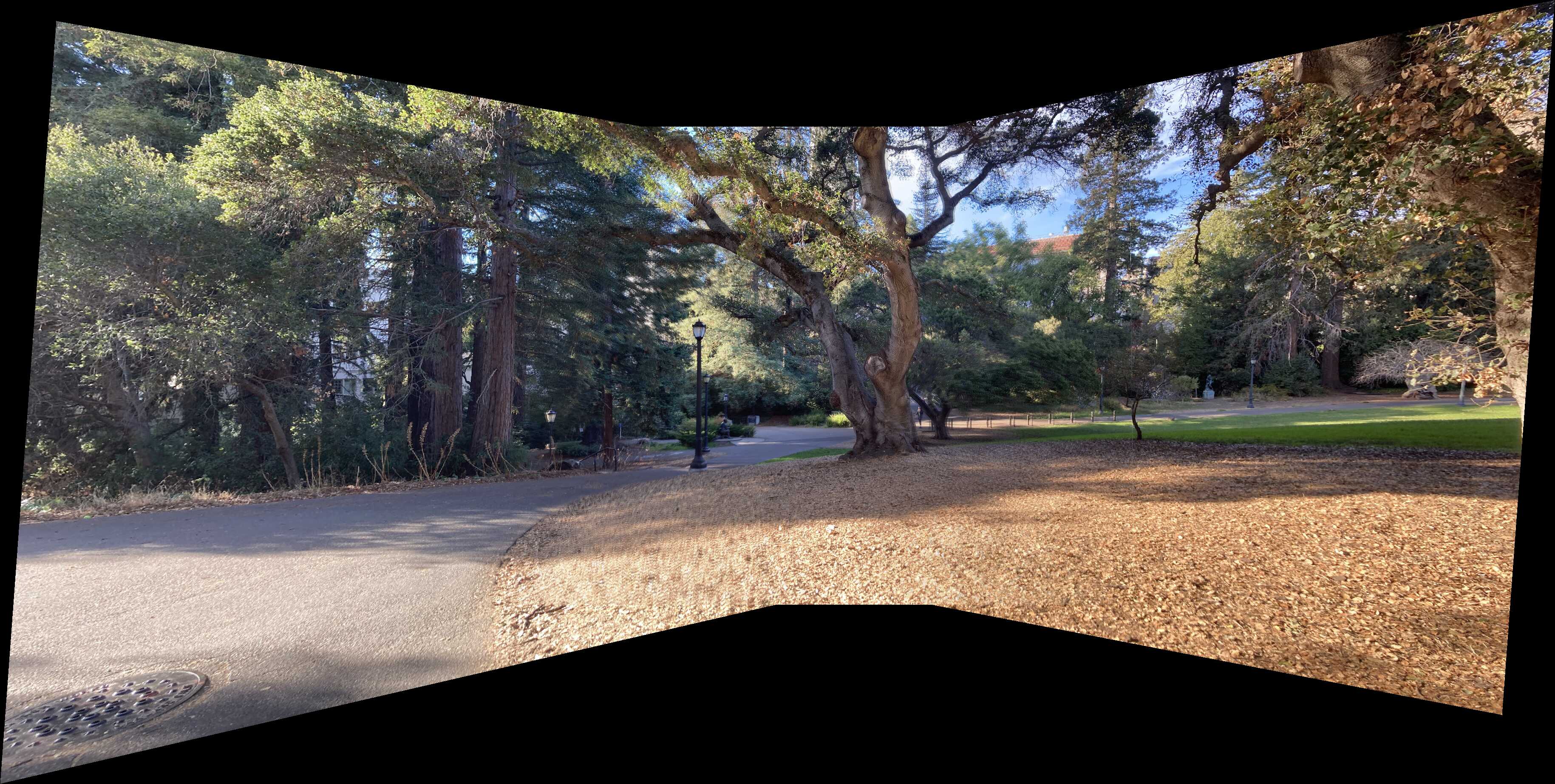

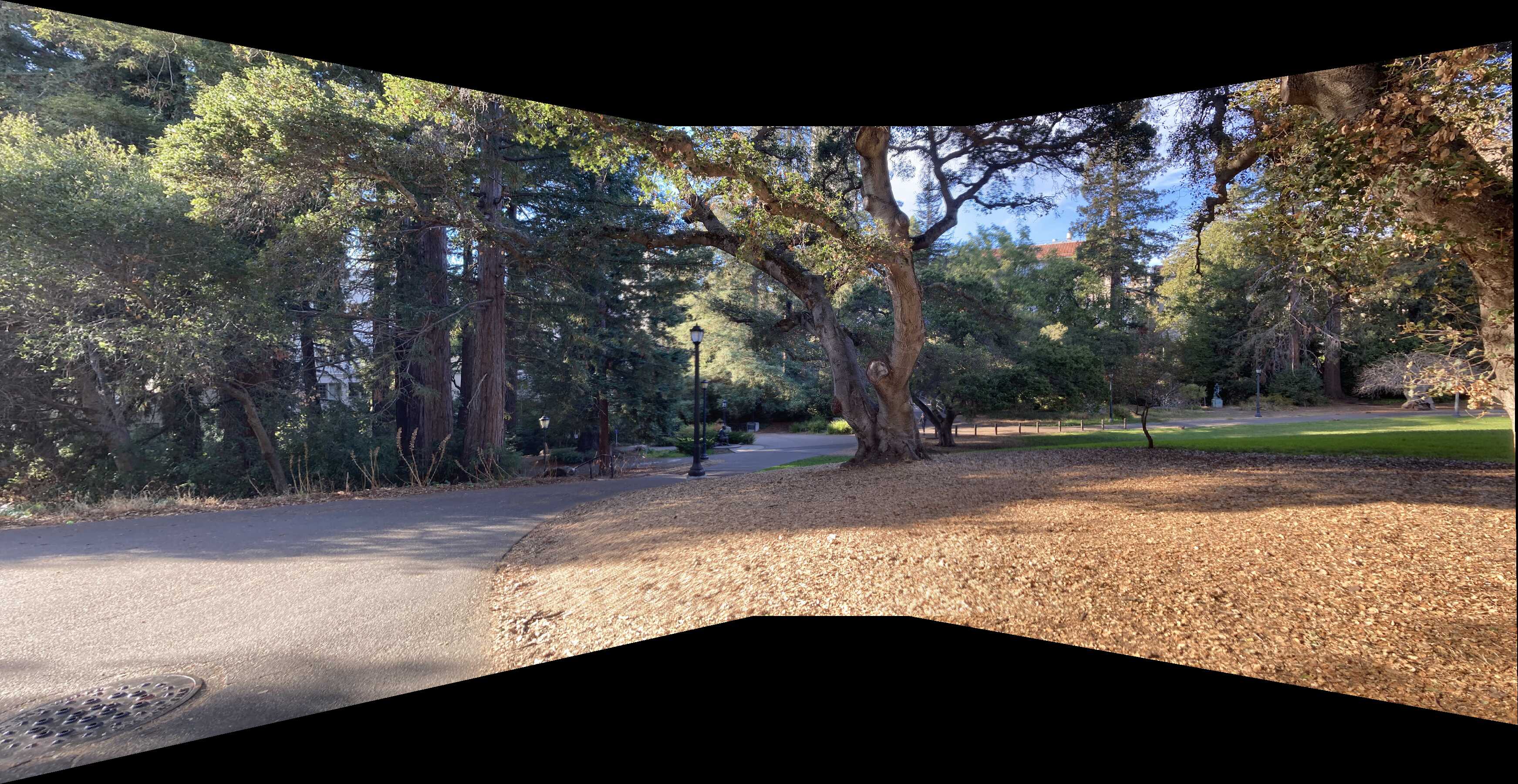

Here is an example of the point correspondences that I used to compute the homography.