Filters for Edge Detection

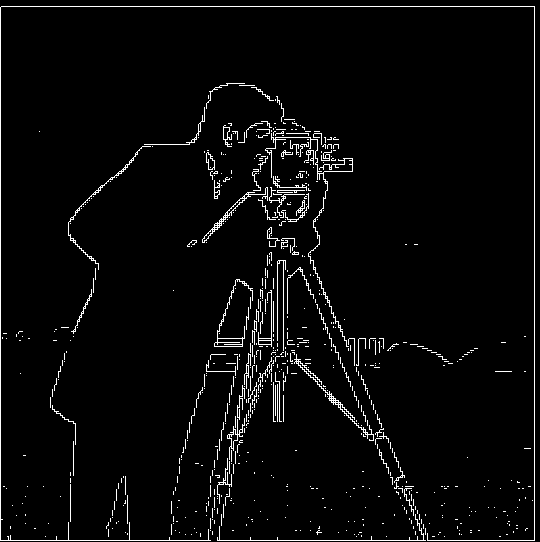

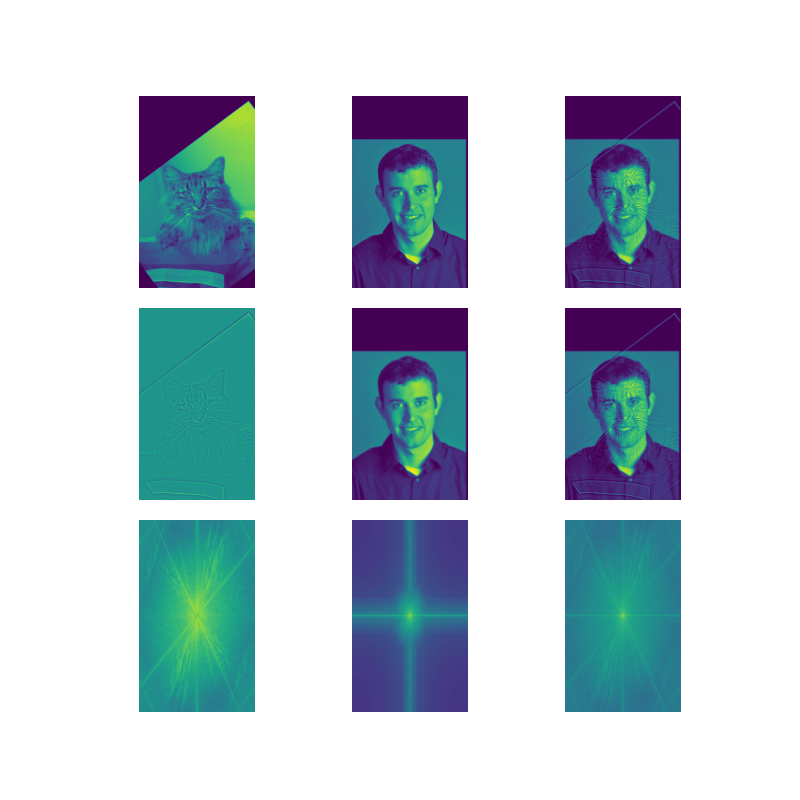

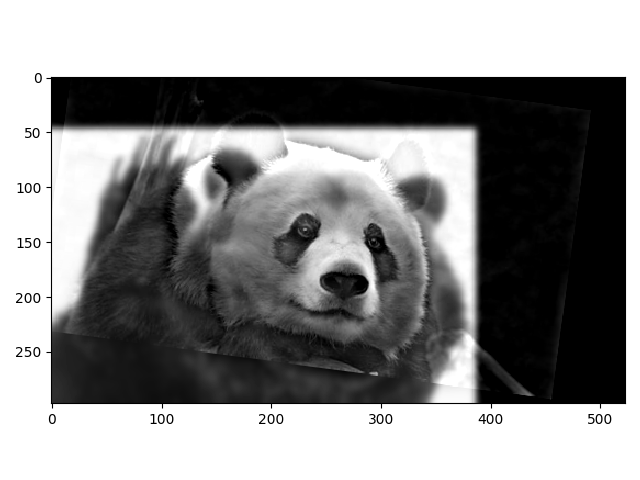

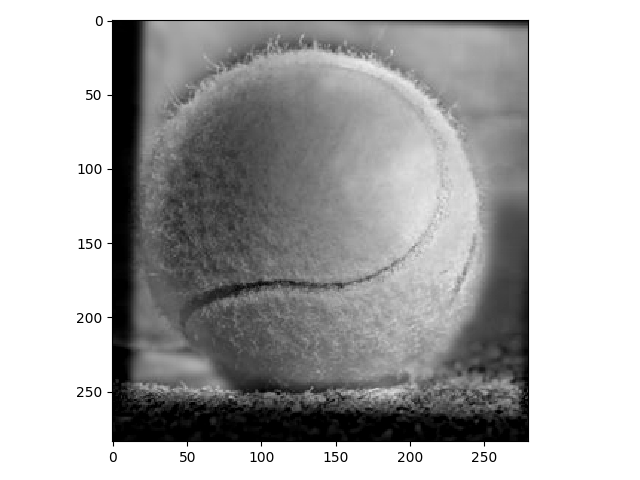

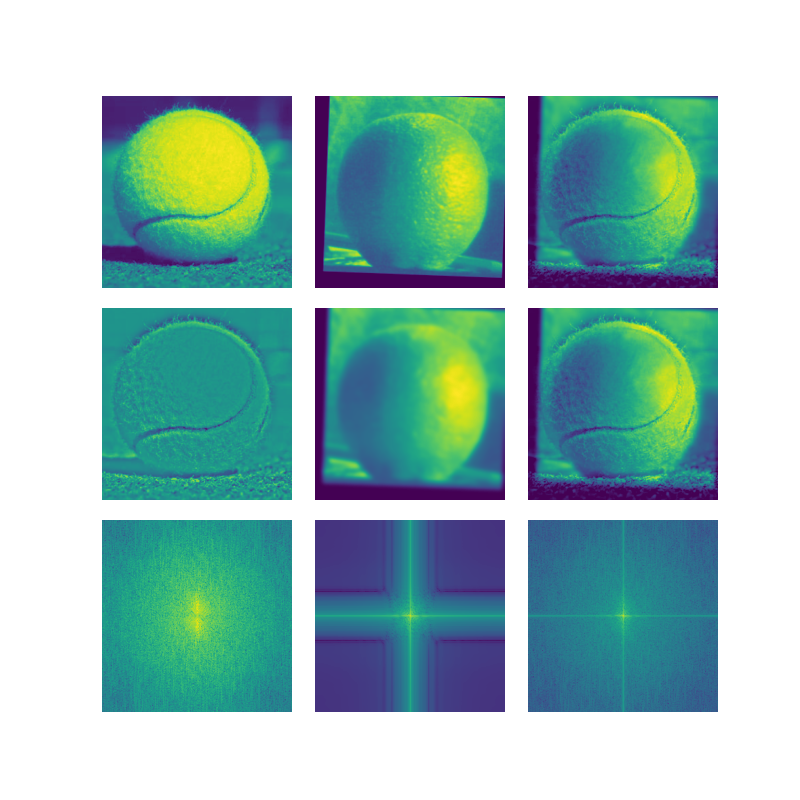

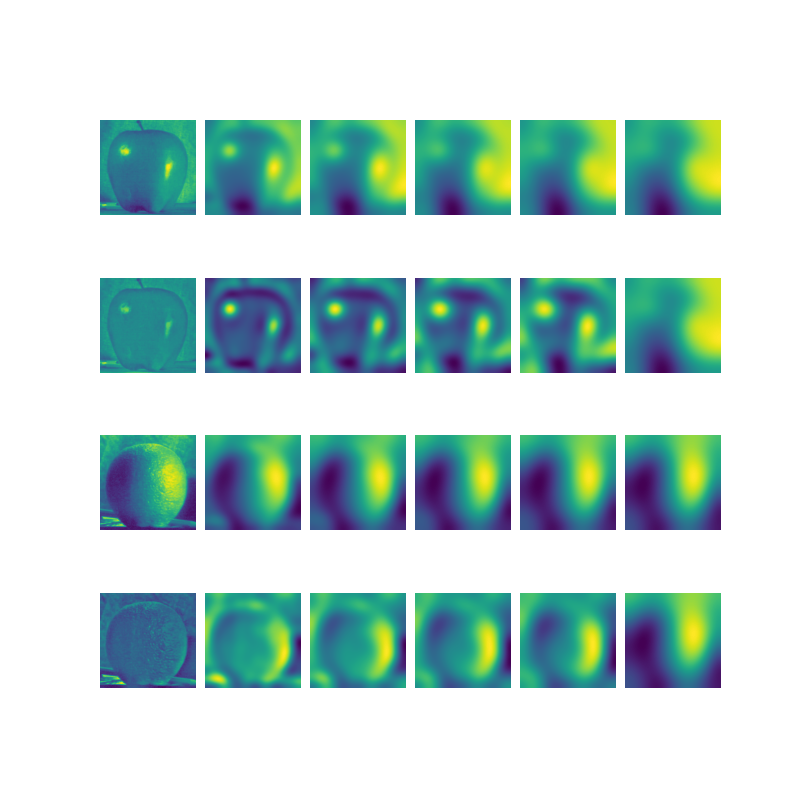

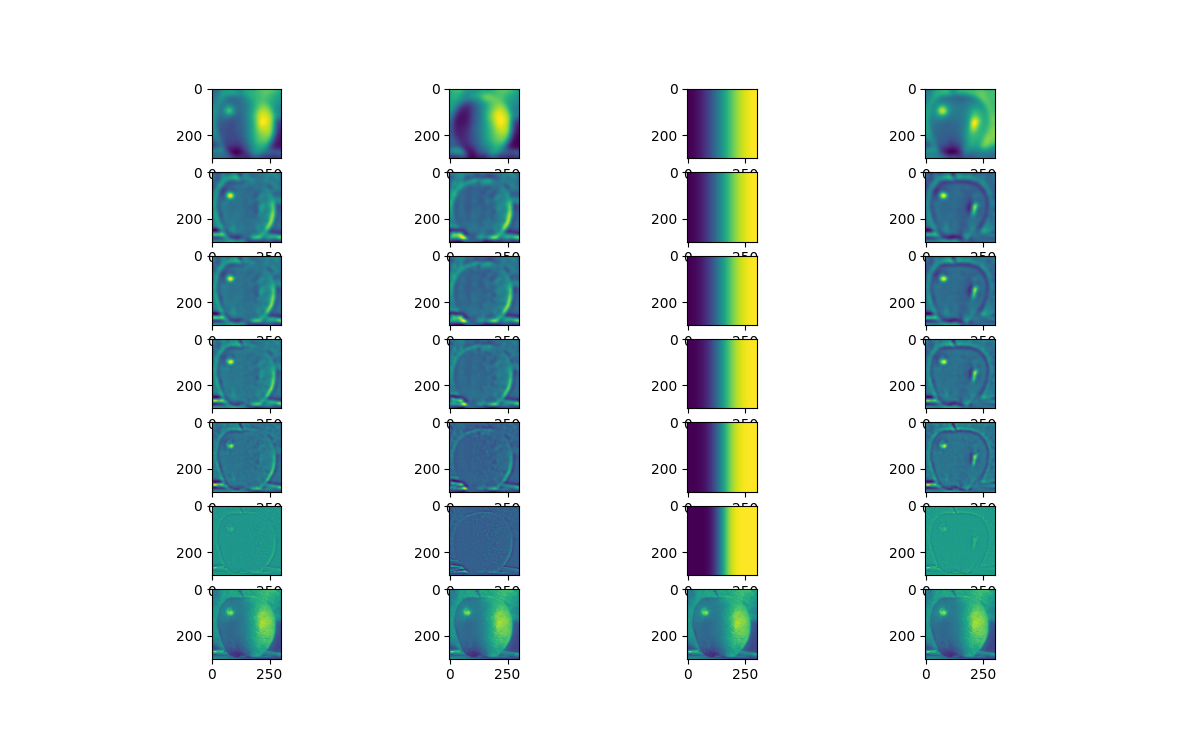

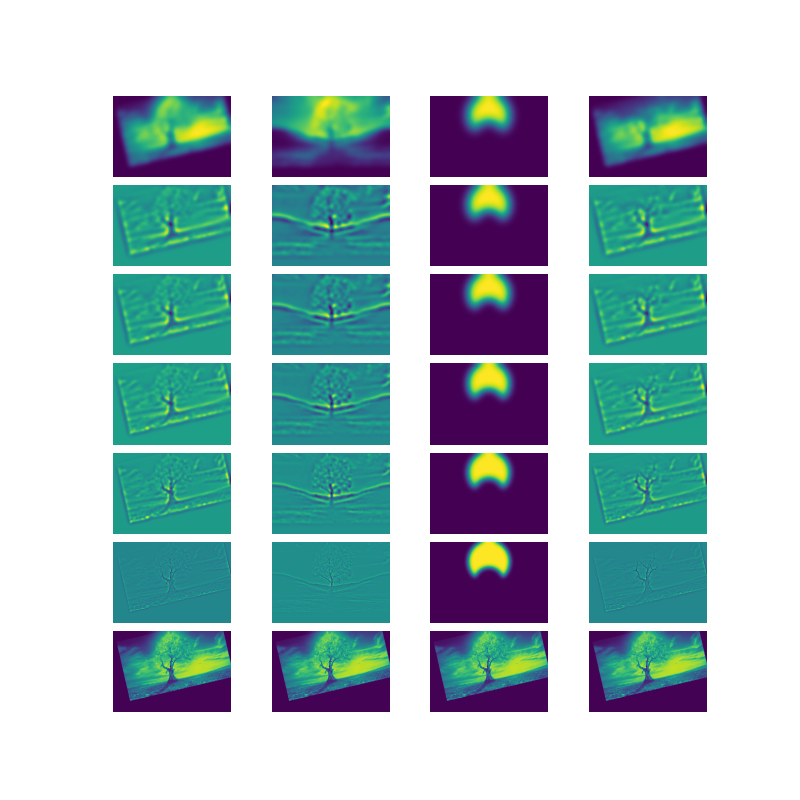

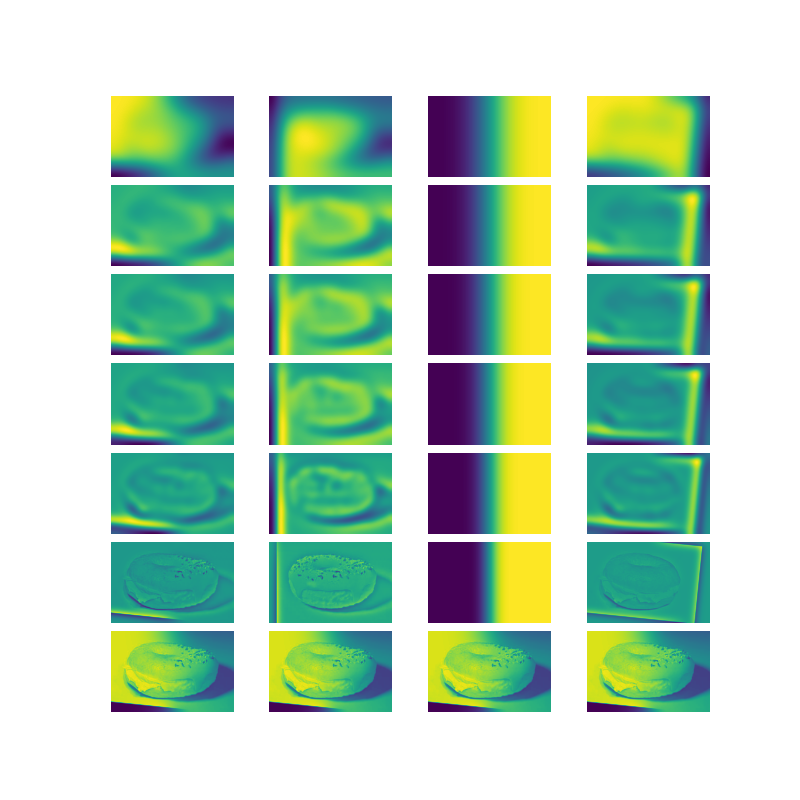

Edge detection means finding areas where the pixel values change abruptly, which corresponds to a large gradient magnitude at that pixel. The filter uses the kernels \begin{equation*} \begin{bmatrix} -1 & 1 \end{bmatrix} \end{equation*} and \begin{equation*} \begin{bmatrix} -1 \\ 1 \end{bmatrix}\end{equation*} derived from the finite difference approximation of the derivative: \begin{equation*}f_x(x, y) = f(x + 0.5, y) - f(x - 0.5, y) \end{equation*} These kernels are convolved with the image to get the \(x\) and \(y\) components of the gradient. We then take the Euclidean norm of the \(x\) and \(y\) components to get the magnitude. To obtain a binary output of zeros and ones, I used a threshold as a cutoff value to decide whether each pixel is part of an edge. To do this, I normalized the gradient magnitude image and compared it to the threshold with \begin{equation*} \lvert\text{img}[i][j] \rvert < 3 \cdot \text{threshold}\end{equation*} Pixels that satisfy this inequality are treated as non-edges (\(0\)), and the remaining pixels are marked as edges (\(1\)). I found that normalization was important for filters that might scale up the pixel values.
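The steps above can be sketched roughly as follows. This is a minimal illustration rather than the code used for the images here: it assumes NumPy and SciPy, the helper names `gradient_magnitude` and `binarize` are hypothetical, and because the exact normalization is not spelled out, the sketch simply thresholds the raw magnitude with an illustrative `cutoff`.

```python
import numpy as np
from scipy.signal import convolve2d

# Finite difference kernels from the text.
D_X = np.array([[-1.0, 1.0]])    # horizontal difference
D_Y = np.array([[-1.0], [1.0]])  # vertical difference

def gradient_magnitude(img):
    """Euclidean norm of the x and y finite difference responses."""
    gx = convolve2d(img, D_X, mode="same", boundary="symm")
    gy = convolve2d(img, D_Y, mode="same", boundary="symm")
    return np.sqrt(gx**2 + gy**2)

def binarize(mag, cutoff):
    """Mark a pixel as an edge (1) when its magnitude exceeds the cutoff."""
    return (mag > cutoff).astype(np.uint8)

# Toy image: dark left half, bright right half, so there is one vertical edge.
img = np.zeros((6, 8))
img[:, 4:] = 1.0

mag = gradient_magnitude(img)
edges = binarize(mag, cutoff=0.5)  # cutoff is an illustrative value
print(edges)
```

On this toy image only the column at the dark-to-bright transition is marked as an edge. The symmetric boundary mode is an assumption made here to avoid spurious edges along the image border.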

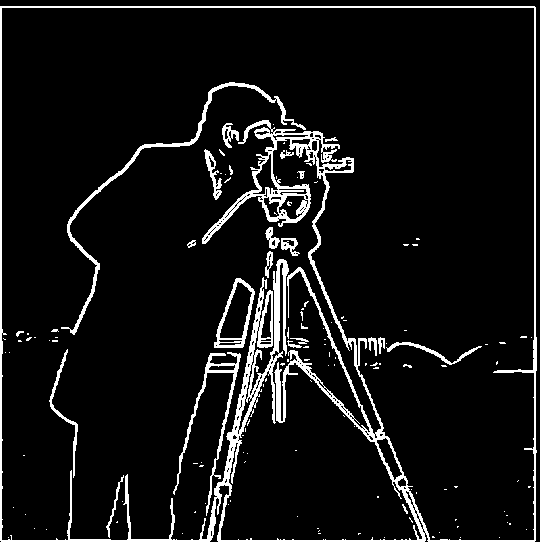

To improve the edge detection, I used a Gaussian blur, which can be convolved with both the finite difference \(x\) and \(y\) filters to get the derivative of Gaussian (DoG) filters. This is useful because it removes the high frequencies and fine details that might accidentally be detected as edges. One difference between the filters is that convolving with a Gaussian first makes the edges less sharp. The gradient magnitude is therefore smaller, and I had to lower the threshold. In the previous filter, I used a threshold of \(0.8\), where all values above \(3 \cdot 0.8\) were set to \(1\). In this one, I adjusted the threshold to \(0.5\).
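As a rough sketch of how such combined filters could be built, the snippet below convolves a Gaussian kernel with each finite difference kernel. The helper `gaussian_kernel` and the values `size=7` and `sigma=1.0` are assumptions chosen for illustration, not the settings used for the results shown here.

```python
import numpy as np
from scipy.signal import convolve2d

def gaussian_kernel(size, sigma):
    """Normalized 2D Gaussian built as the outer product of a 1D Gaussian."""
    ax = np.arange(size) - (size - 1) / 2.0
    g = np.exp(-(ax**2) / (2.0 * sigma**2))
    g /= g.sum()
    return np.outer(g, g)

D_X = np.array([[-1.0, 1.0]])
D_Y = np.array([[-1.0], [1.0]])

G = gaussian_kernel(size=7, sigma=1.0)  # illustrative size and sigma

# The default mode="full" keeps the entire combined kernel.
dog_x = convolve2d(G, D_X)
dog_y = convolve2d(G, D_Y)

print(dog_x.shape, dog_y.shape)
print(np.abs(dog_x).max())  # much smaller than the finite difference weights of 1
```

The combined kernels have small weights compared with the \(\pm 1\) entries of the plain finite difference kernels, which fits the observation that the gradient magnitude shrinks and the threshold has to be lowered.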

Below on the left is the output using the finite difference filters, and on the right is the output with the derivative of Gaussian (DoG) filters. The clearest difference is at the bottom of the images. The finite difference filter picks up the high frequencies and details that come from the grass, while blurring the image with a Gaussian suppresses these frequencies. So in the DoG image, the high frequencies of the grass are not captured. Another visible difference is the width of the detected edges. Since we are blurring the image, it makes sense that pixels near an edge pick up a gradient similar to that of their neighbors.

The same result is achieved whether we apply a Gaussian blur to the image and then the finite difference filter, or first convolve the finite difference filter with a Gaussian filter and then apply the combined filter to the image. This is because convolution is associative.
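A quick numerical check of this equivalence might look like the following. It is a sketch under stated assumptions: a random array stands in for the image, the 5-tap Gaussian with unit sigma is illustrative, and full convolution with zero padding is used so that associativity holds exactly up to floating point error.

```python
import numpy as np
from scipy.signal import convolve2d

rng = np.random.default_rng(0)
img = rng.random((32, 32))  # random stand-in for a real image

# Small normalized Gaussian (5 taps, sigma = 1), illustrative values.
g1 = np.exp(-((np.arange(5) - 2.0) ** 2) / 2.0)
g1 /= g1.sum()
G = np.outer(g1, g1)

D_X = np.array([[-1.0, 1.0]])

# Route 1: blur the image, then apply the finite difference filter.
blur_then_diff = convolve2d(convolve2d(img, G), D_X)

# Route 2: combine the two filters first, then convolve the image once.
combined_once = convolve2d(img, convolve2d(G, D_X))

max_gap = np.max(np.abs(blur_then_diff - combined_once))
print(max_gap)  # on the order of floating point rounding error
```

With `mode="same"` or a non-zero boundary mode, the two routes can differ slightly near the image border, since cropping or padding at each step is no longer a pure convolution.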